
How Base increased overall AI traffic by 48.6% with Chatbeat

Results at a glance

1. Stronger overall AI visibility: Brand Score 50% → 65% in ~2 months

2. Better placement in AI answers: average position 8 → 5; median position 6 → 3

3. Competitive jump: moved from #4 to #2 among competitors

4. AI visibility → real traffic: website sessions from Gemini up 12×; from Copilot up 60×

5. Overall AI traffic: 48.6% increase

Key takeaways

- Base approached AI visibility as both a growth channel and a brand proof-point. The goal was to be the platform AI models treat as an authority, not just a name that occasionally appears.

- The work started with a baseline audit and ongoing monitoring, to answer the fundamentals: is Base showing up in AI answers, which pages get cited, and how does that compare to competitors?

- Content was optimized for “citable authority”: deeper original pages, list-friendly formats (rankings/comparisons), credible external references, and FAQ structure plus schema for easy extraction.

- The impact showed up where it matters: stronger AI visibility, a clear competitive lift, and traffic growth from AI platforms, without relying on vanity metrics alone.

About Base

Base is an e-commerce sales management platform trusted by over 30,000 companies to power their global operations. Designed for teams selling across multiple channels, it centralizes order processing, product management, marketplace listings, shipping, and automation into a single interface.

The platform further streamlines workflows with specialized tools for analytics, warehouse management (WMS), product information management (PIM), repricing, and AI, and offers an ecosystem of 1,700+ integrations, including major marketplaces, online stores, accounting software, and carriers.

Featured in this case study:

Karina Kondraciuk – Senior SEO Specialist at Base. She leads the brand’s organic visibility strategy, leveraging her extensive background in SEO across e-commerce and service sectors. Currently, she connects technical optimization with content strategy, expanding the brand’s reach into AI search via Generative Engine Optimization (GEO).

Challenge

When AI started dominating the conversation, the Base team treated it as a new customer acquisition channel – but the motivation wasn’t only performance-driven.

They also had a distinctly brand-driven goal: prove that AI models see Base as an authority and actually use its content as a trusted source.

They started working on AI visibility in May 2025, with two core challenges in mind:

- Understand AI user intent: what people really ask in AI tools – and which questions Base wants to be the default answer for.

- Stop operating in the dark: without hard data, it’s almost impossible to tell whether content updates and onsite improvements are actually changing visibility in model answers.

Strategy

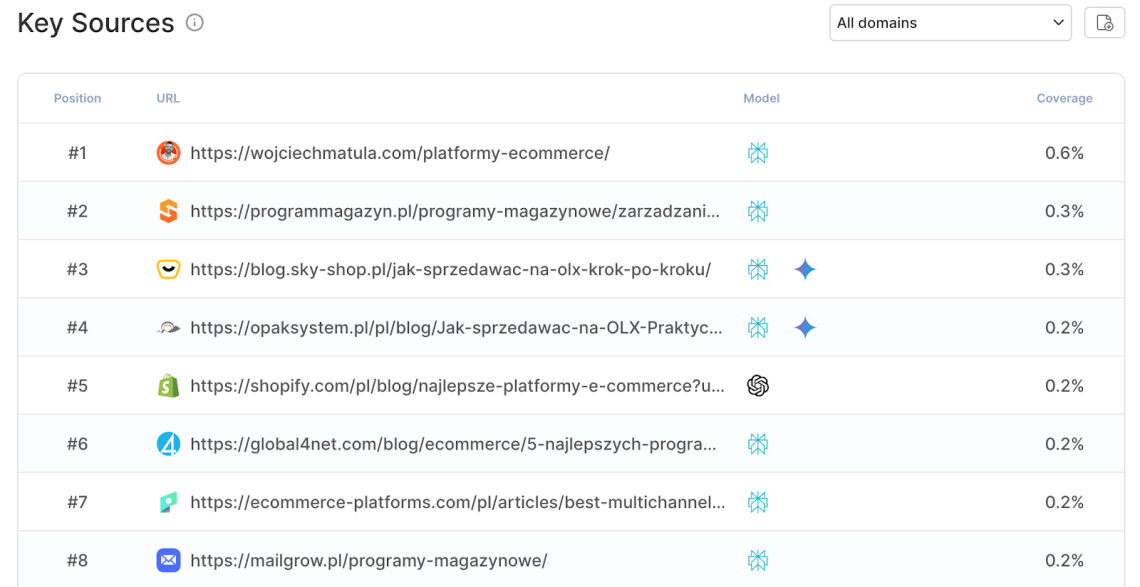

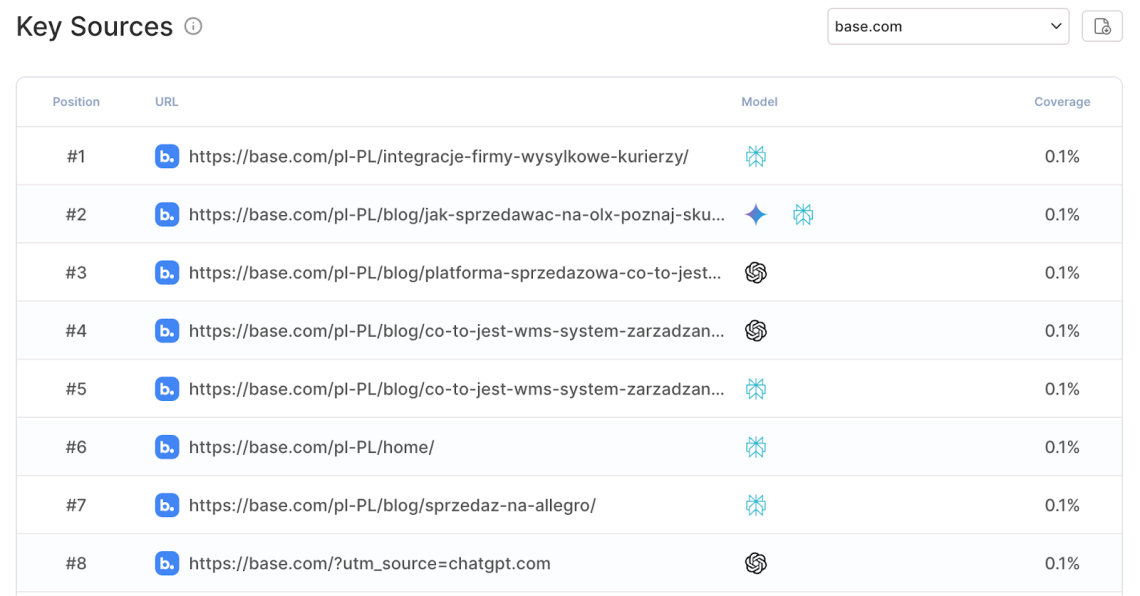

1. Monitor & diagnose – “Are we visible to LLMs at all?”

They started with a baseline, not assumptions:

- Verified whether the site is accessible to LLMs and whether its content is actually visible to them.

- Checked whether Base shows up in AI answers and in cited sources, and how that compares to competitors.

Where Chatbeat fit: one dashboard plus the Sources view to confirm whether Base appears, who appears instead, and whether the metrics are trending up.
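The case study doesn’t describe how Base checked crawler access. A common starting point is robots.txt: if it blocks AI crawlers, content work elsewhere can’t help. Here is a minimal Python sketch using the standard library’s `urllib.robotparser`; the robots.txt content and example.com URL are made up, and the crawler tokens in `AI_AGENTS` are an assumed list (check each AI provider’s documentation for current names).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice you would fetch the live file
# (e.g. RobotFileParser.set_url(...) followed by .read()).
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

# Assumed list of AI crawler / AI-usage tokens to audit; extend as needed.
AI_AGENTS = ["GPTBot", "ClaudeBot", "PerplexityBot", "Google-Extended"]


def audit_ai_access(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Return {agent: allowed?} for one URL under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    report = audit_ai_access(SAMPLE_ROBOTS_TXT, "https://example.com/features", AI_AGENTS)
    for agent, allowed in report.items():
        print(f"{agent:16} {'allowed' if allowed else 'BLOCKED'}")
```

robots.txt only governs crawling permission. Whether content is actually visible also depends on factors such as JavaScript rendering, which this sketch does not check.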

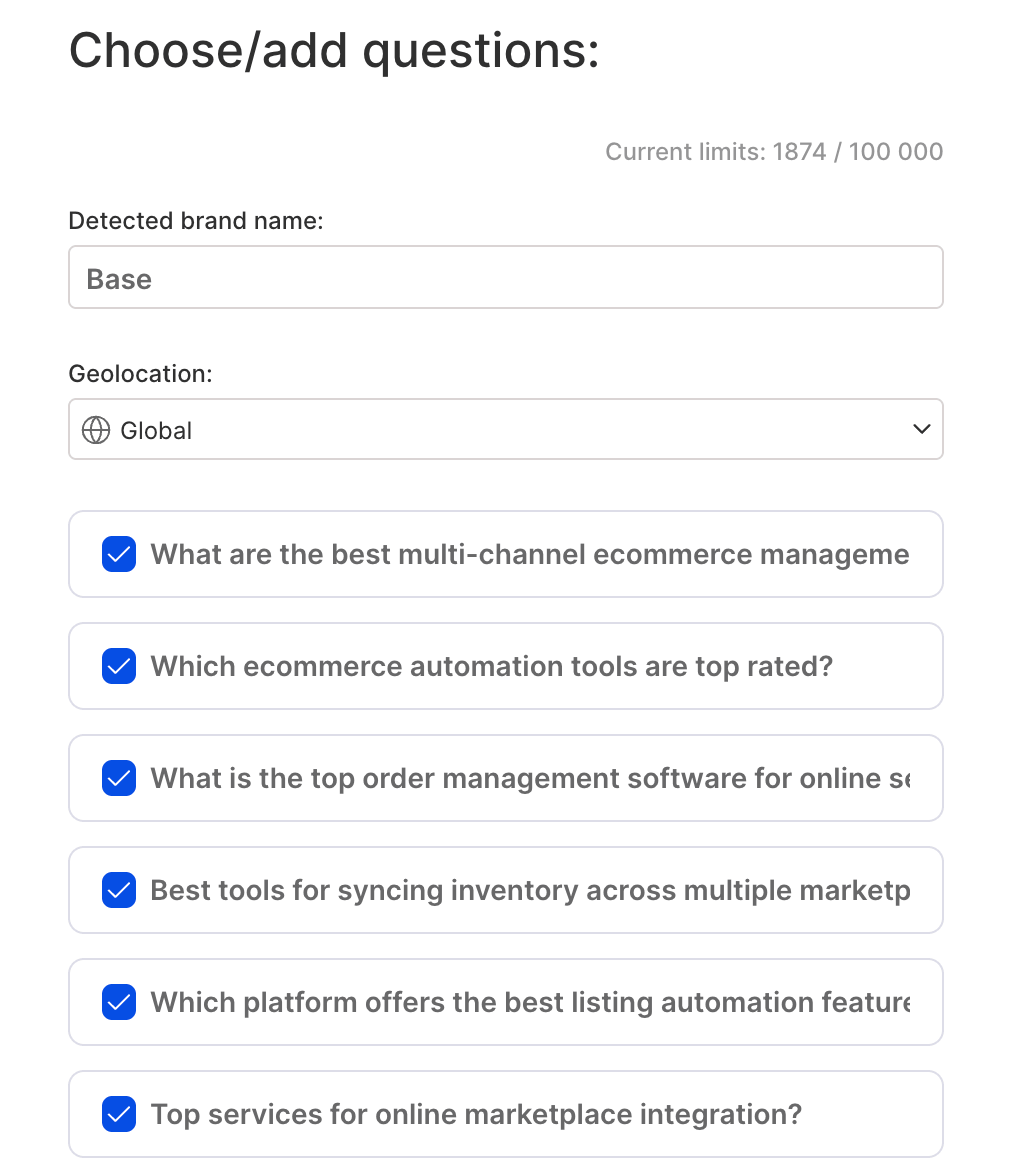

2. Map prompts – from broad questions to product-driven branches

The early challenge was twofold: understanding what users really ask, and deciding which questions Base wanted to win. Their process:

- Started with broad category prompts like “which e-commerce management system should I choose?”

- Broke them into topic branches

- Built prompt sets around their product’s functions, so monitoring aligned with what they could credibly answer.

Where Chatbeat fit: suggested prompts were a major accelerator when finding the right prompts was difficult.
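The broad-to-branch process can be pictured as a tree of prompts. A minimal sketch, assuming a simple Python data structure (`PromptNode` is a hypothetical name, not a Chatbeat feature); apart from the root prompt quoted above, the branch prompts are illustrative and built from product functions listed in the About section.

```python
from dataclasses import dataclass, field


@dataclass
class PromptNode:
    """One node in a hypothetical prompt map: a topic and the prompts tracked under it."""
    topic: str
    prompts: list[str] = field(default_factory=list)
    children: list["PromptNode"] = field(default_factory=list)

    def add_branch(self, topic: str, prompts: list[str]) -> "PromptNode":
        """Attach a narrower topic branch and return it."""
        child = PromptNode(topic, prompts)
        self.children.append(child)
        return child

    def all_prompts(self) -> list[str]:
        """Flatten the tree depth-first into the list of prompts to monitor."""
        collected = list(self.prompts)
        for child in self.children:
            collected.extend(child.all_prompts())
        return collected


# Broad category prompt at the root (quoted in the case study).
root = PromptNode(
    "E-commerce management",
    ["which e-commerce management system should I choose?"],
)
# Branches keyed to product functions; prompt wording is illustrative only.
root.add_branch("Order processing", ["how do I manage orders from several marketplaces in one place?"])
root.add_branch("Marketplace listings", ["what tool can list products on multiple marketplaces at once?"])
root.add_branch("Shipping", ["how can I automate shipping labels for online orders?"])

for prompt in root.all_prompts():
    print(prompt)
```

Keeping the map as a tree makes it easy to see which product areas have prompt coverage and which branches still need monitoring.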

3. Optimize for “citable authority” – content, formats, and credibility

They identified the way content is written as the biggest lever, and reworked it so models could more easily trust and reuse it:

| # | Optimization lever | What they did | Why it matters for LLM visibility |
|---|---|---|---|
| 1 | Deep, original content | Published in-depth, unique pages that answer real user questions | Increases “authority density” and gives models stronger material to reuse and cite |
| 2 | Rankings & comparisons | Added rankings/comparisons after spotting that monitored prompts often trigger list-style answers | LLMs frequently respond in lists, so these formats fit the output structure and get pulled more easily |
| 3 | Trusted external sources | Backed claims with credible third-party references | Improves trustworthiness when models evaluate which sources to rely on |
| 4 | FAQ + structured data | Rolled out FAQ sections across most subpages (natural language, expert tone) and implemented FAQ schema | Makes answers easier to extract and improves machine-readability for Q&A-style queries |
| 5 | llms.txt | Implemented llms.txt highlighting product functions, key pages, and selected “preferred source” content; Gemini positions became more stable and consistently high afterward | Helps steer models toward the right pages and can reduce volatility in product-focused prompts |
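The case study doesn’t show Base’s markup. For reference, FAQ schema is commonly published as schema.org `FAQPage` JSON-LD. A minimal Python sketch that generates it; the `faq_jsonld` helper and the Q&A text are hypothetical:

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


# Hypothetical FAQ content; use the questions a given subpage actually answers.
snippet = faq_jsonld([
    ("What is a multichannel order management system?",
     "Software that collects orders from several sales channels into one panel."),
])
# Embed the output in the page inside <script type="application/ld+json"> ... </script>.
print(snippet)
```

The marked-up questions and answers should match the FAQ text visible on the page, so the schema and the human-readable FAQ tell the same story.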

Where Chatbeat fit: visibility + sources monitoring became the feedback loop for iteration.
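llms.txt (row 5 above) is a community proposal (llmstxt.org): a Markdown file at the site root with an H1 name, a blockquote summary, and H2 sections listing links. Support varies by AI provider, so treat it as a hint rather than a guarantee. A minimal sketch of generating one in Python; the `build_llms_txt` helper, section names, and example.com URLs are hypothetical, not Base’s actual file:

```python
def build_llms_txt(name: str, summary: str,
                   sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Render an llms.txt file in the llmstxt.org layout:
    H1 name, blockquote summary, then H2 sections of Markdown link lists."""
    lines = [f"# {name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            # A note after the link is optional in the proposed format.
            lines.append(f"- [{title}]({url}): {note}" if note else f"- [{title}]({url})")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical content: example.com URLs stand in for a real site's key pages.
llms_txt = build_llms_txt(
    "Base",
    "E-commerce sales management platform for multichannel sellers.",
    {
        "Product functions": [
            ("Order management", "https://example.com/orders", "Central order processing across channels"),
            ("Marketplace listings", "https://example.com/listings", ""),
        ],
        "Preferred sources": [
            ("Integrations overview", "https://example.com/integrations", "1,700+ integrations"),
        ],
    },
)
print(llms_txt)
```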

Results

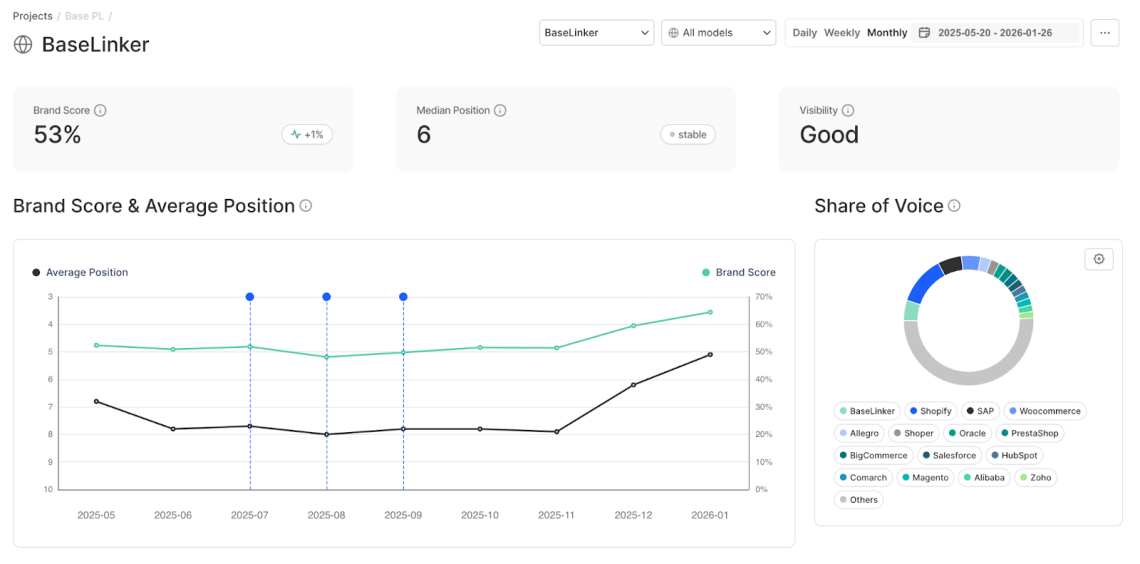

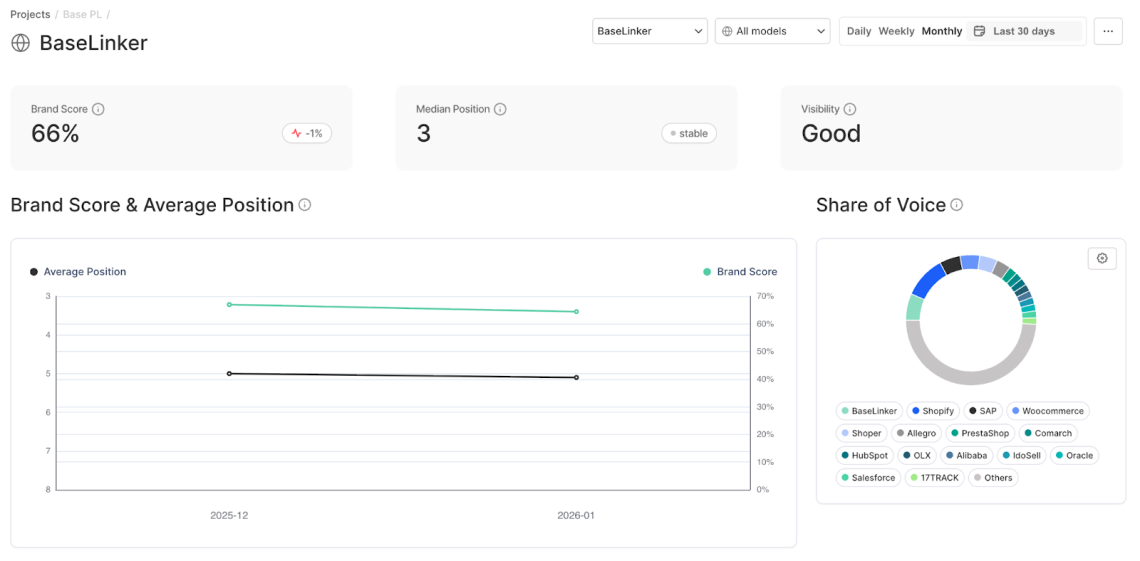

1. Stronger overall AI visibility

Base achieved a clear uplift in AI visibility across monitored models (as of Jan 26, 2026):

- Brand Score: 50% → 65% in ~2 months

- Average position in LLM answers: 8 → 5

- Median position: 3 (improved from 6 in October)
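Average and median position can move differently, assuming both are computed over the brand’s positions across tracked answers: a few prompts where the brand ranks far down pull the mean up, while the median reflects the typical answer (a median of 3 means at least half of the tracked answers place the brand at position 3 or better). A short Python illustration with made-up positions, chosen so the summary statistics land on the reported 8 → 5 and 6 → 3; these are not Base’s per-prompt data:

```python
from statistics import mean, median

# Made-up positions across ten tracked answers (1 = first item in the AI answer).
# NOT Base's data; they only show how mean and median can diverge.
before = [2, 3, 4, 5, 6, 6, 9, 12, 15, 18]
after = [1, 2, 2, 3, 3, 3, 5, 8, 10, 13]

print(f"mean:   {mean(before):.1f} -> {mean(after):.1f}")
print(f"median: {median(before):.1f} -> {median(after):.1f}")
```

The long tail (positions 12 to 18) keeps the mean well above the median in both periods.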

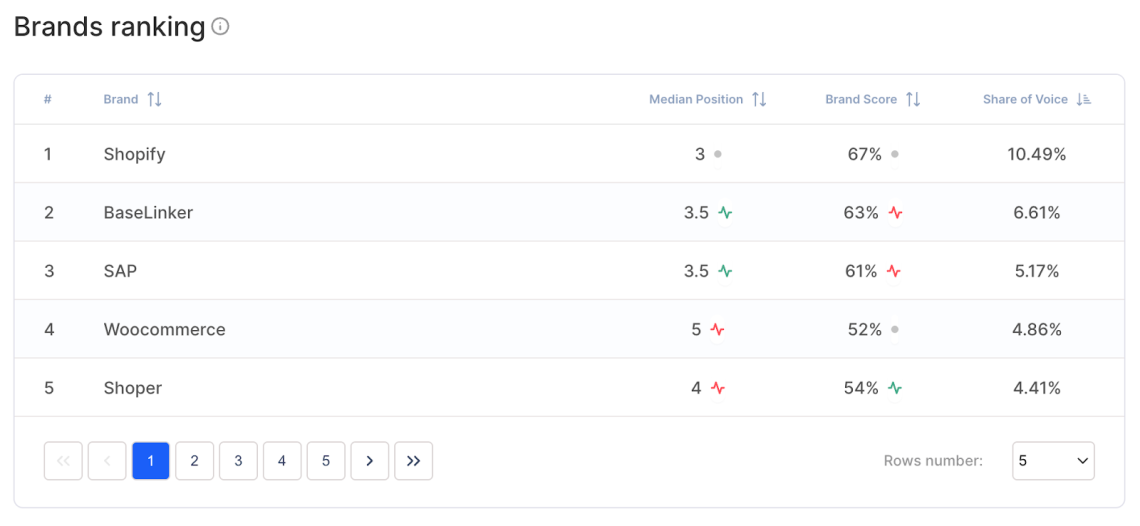

2. Competitive leap

Base moved from a steady #4 position (pre-December) to #2 among competitors in the last 30 days – turning AI visibility into a real competitive advantage, not just incremental progress.

3. Visibility → traffic (real sessions, not vanity metrics)

The growth wasn’t limited to “scores” inside tools – it translated into site visits from AI platforms:

- Gemini: 12× more sessions (May → December)

- Copilot: 60× more sessions (May → December)

- Overall AI traffic: 48.6% higher at the November peak vs. May
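The headline figures mix three kinds of change: percentage points (Brand Score), growth multiples (Gemini, Copilot), and a percent increase (overall AI traffic). A quick Python conversion puts them on one scale; the helper names are just for illustration:

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100


def multiple_to_percent(multiple: float) -> float:
    """Convert an 'N× more' growth multiple into a percent increase."""
    return (multiple - 1) * 100


# Figures from the case study.
print(f"Brand Score 50% -> 65%: +{65 - 50} percentage points, "
      f"+{percent_change(50, 65):.0f}% relative")
print(f"Gemini 12x sessions  = +{multiple_to_percent(12):,.0f}%")
print(f"Copilot 60x sessions = +{multiple_to_percent(60):,.0f}%")
print(f"Overall AI traffic +48.6% = {1 + 48.6 / 100:.3f}x the May level")
```

The per-platform multiples and the overall figure aren’t directly comparable: a 12× jump on a platform that started with few sessions can coexist with a much smaller overall increase. The periods also differ (May → December for the platforms, May → the November peak for the total).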

Takeaway for other brands

We asked Karina what other brands can do to replicate Base’s results. Here’s her practical playbook:

1. Treat it like SEO: it’s a marathon, not a one-off campaign. Consistency wins.

2. Start with monitoring + a prompt map: know what people actually ask in AI tools, and where you want to “enter” the answers.

3. Authority comes from substance: expert, original content supported with FAQs and trusted external sources (especially if you don’t have your own research to cite).

4. Double down on LLM-friendly formats: rankings, comparisons, checklists, definitions, well-described visuals/infographics, and video content (e.g., YouTube).

5. Don’t neglect SEO fundamentals: models pick up on them, and your AI visibility tends to rise with classic SEO growth.
