
How Worksmile Increased AI Visibility by 27% with Chatbeat

Results at a glance

- 1 From position #17.3 in Q2 2025 to #12.6 in Q4 2025 for an HR-related prompt: +4.7 positions, a 27% improvement. In the last 30 days (as of 22 Jan 2026), the position reached #10.

- 2 They took over Key Sources: from 1 Worksmile source in the Top 10 (#5, Q2 2025) to #1 in citations with 3 sources in the Top 10 (Q4 2025).

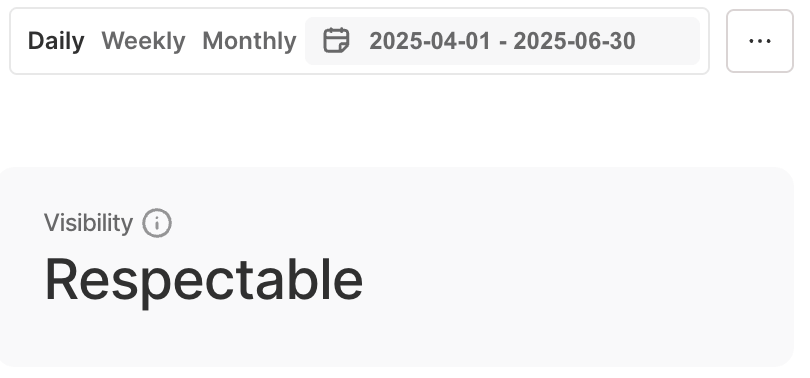

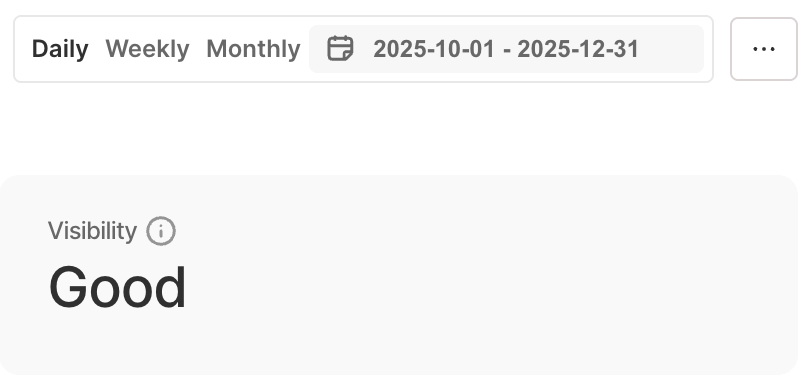

- 3 Visibility jumped a tier: “respectable” → “good” in just a few months.

Key takeaways

- AI answers are already shaping how HR teams discover tools. Worksmile saw their brand story being rebuilt there, sometimes accurately, sometimes drifting based on outdated and third-party sources.

- Worksmile used Chatbeat to make that invisible layer measurable. They used it to track the questions that matter, compare how models describe them, and see exactly which sources steer the narrative.

- With those insights, they moved from “fixing copy” to fixing inputs. This helped them reduce legacy rebrand baggage online and strengthen HR and internal communication messaging where models were weakest.

- The result is a repeatable loop that keeps improving over time. Monitor → act → validate – keeping Worksmile’s positioning consistent across models as the ecosystem shifts.

About Worksmile

Worksmile is an HR platform for the entire organization. It addresses the challenges faced by HR teams and managers while also taking care of every employee’s experience: it automates processes, streamlines communication, and supports benefits management in one place.

Featured in this case study:

Magdalena Radecka – Head of Marketing at Worksmile. At Worksmile, she leads the team responsible for demand generation and brand awareness enhancement.

Challenge

Worksmile realized that their “brand story” wasn’t being told in one place anymore – it was being reconstructed inside LLM answers. And when HR and People teams asked seemingly simple questions, the output wasn’t reliably aligned with how Worksmile wanted to be understood.

Sometimes the description was accurate; other times, the HR context came out vague, or models leaned on third-party and outdated sources, so the narrative drifted depending on the prompt and the model.

The real problem wasn’t one incorrect answer – it was the lack of visibility and control:

- no way to see which prompts were drifting,

- no way to understand which sources were shaping the response,

- no clear path to improving rankings and becoming the source that AI systems actually cite.

Worksmile needed to make “AI visibility” operational – something they could track, fix, and prove with movement in positions and citations.

Solution

Worksmile used Chatbeat as a continuous AI visibility layer – tracking how different models answer specific questions, what sources they cite, and where the brand’s message diverges across prompts.

That created a simple feedback loop:

Prompt monitoring → narrative gaps → content actions → re-check rankings and citations

What Chatbeat gives Worksmile in practice

1. Reputation control in LLM responses

Before Worksmile became an employee experience and HR platform, it was known as Fitqbe, focused on well-being and benefits.

Since then, they have moved into other areas, but because of that history, LLMs sometimes described the brand misleadingly, relying on data from before the rebrand.

Worksmile wanted to monitor how language models describe the brand – and respond immediately if the narrative starts drifting away from their desired positioning.

Key insight from Chatbeat: LLMs described Worksmile well as a benefits platform, but had a weaker grasp of its HCM (human capital management) and internal communications capabilities. The team quickly linked this to the brand’s history and the presence of archived materials online, which still influence how AI “sees” Worksmile.

Action:

Based on that, they created a concrete action plan:

- reduce the exposure of well-being-related content (e.g., by hiding some subpages and scenarios),

- put more emphasis on content about internal communication and HR processes,

- distribute and promote content outside the website (e.g., running ads on new platforms like Bing).

Result:

The team is already seeing the first shift in AI responses toward internal communication and engagement.

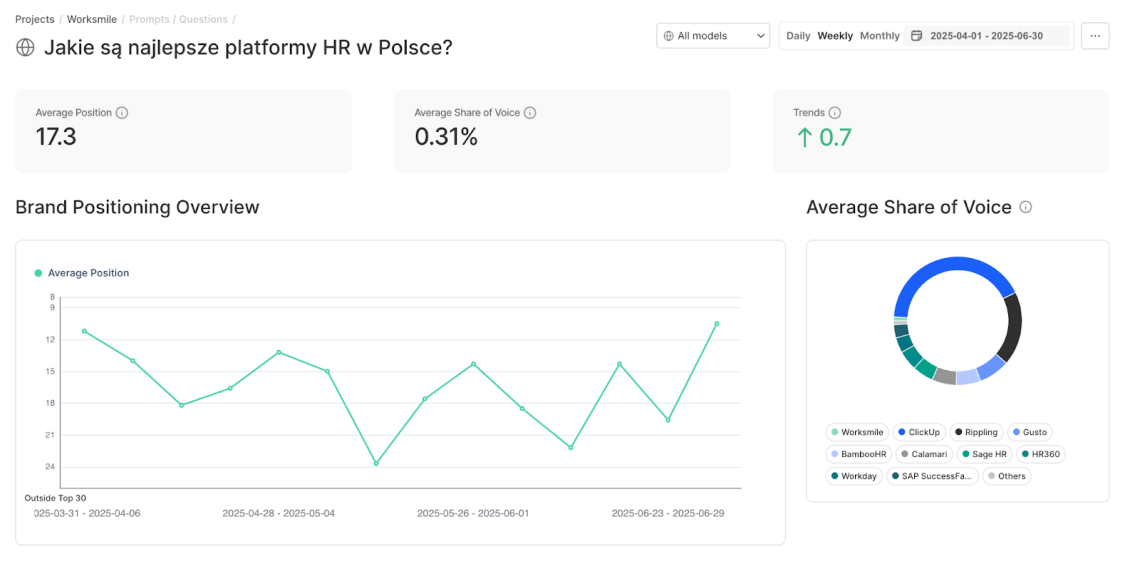

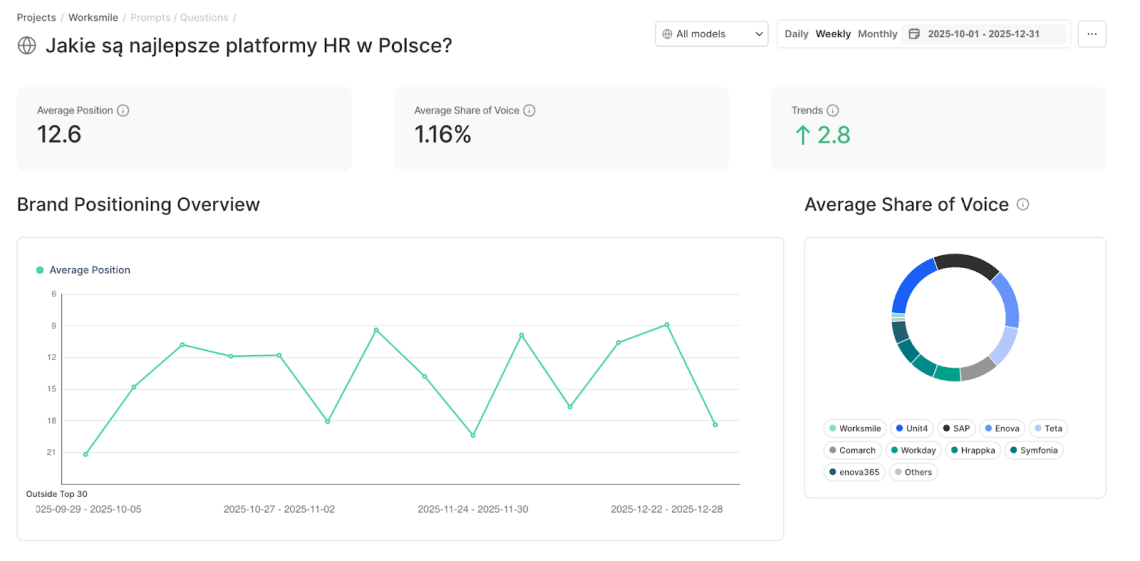

The comparison below is between Q2 2025 (when Worksmile first started exploring AI) and Q4 2025 for one of the HR-related prompts. A lower position number means a better rank.

- Q2 2025: position 17.3 (Worksmile begins exploring AI)

- Q4 2025: position 12.6 (+4.7 positions, a 27% improvement)

- Last 30 days (as of 22 Jan 2026): 10th place
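The 27% figure is the relative change in position between the two quarters. A minimal sketch of that arithmetic, assuming the improvement is measured against the starting position (the function name is illustrative and not part of Chatbeat):

```python
def position_improvement(before: float, after: float) -> tuple[float, float]:
    """Return (positions gained, relative improvement in percent).

    Lower position numbers are better, so the gain is before - after.
    """
    gained = before - after
    percent = gained / before * 100
    return round(gained, 1), round(percent, 1)


# Figures from the case study: position 17.3 in Q2 2025, 12.6 in Q4 2025.
gained, percent = position_improvement(17.3, 12.6)
print(f"+{gained} positions, {percent}% improvement")
# prints "+4.7 positions, 27.2% improvement"
```

Rounded to a whole number, this matches the 27% quoted above.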

The next step is to verify progress in a few months by adding more “HCM-heavy” questions to the monitoring and checking how the models’ positioning changes over time.

2. Building an LLM-Ready Content Strategy

Worksmile didn’t stop at reputation and narrative control in LLMs – they also wanted their own content to become the primary source models cite when answering questions about the brand and industry.

Instead of treating “LLM visibility” as a lucky side effect of good marketing, they approached it like a channel: measurable, testable, and planned.

Key insight from Chatbeat: By checking Key sources for their prompts, they saw which sources were cited most and could examine why. They also saw which pages from their own domain were being cited and what share of voice those pages held, which gave them a baseline to improve on.
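To make the idea concrete, here is a rough sketch of how a share-of-voice figure could be derived from a list of cited URLs. It assumes share of voice means the fraction of citations that point to your own domain; Chatbeat's actual definition may differ, and the URLs and function names below are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def share_of_voice(cited_urls: list[str], own_domain: str) -> float:
    """Fraction of citations whose host is own_domain or one of its subdomains."""
    if not cited_urls:
        return 0.0
    hosts = [urlparse(url).hostname or "" for url in cited_urls]
    own = sum(1 for h in hosts if h == own_domain or h.endswith("." + own_domain))
    return own / len(hosts)


def top_sources(cited_urls: list[str], n: int = 10) -> list[tuple[str, int]]:
    """Most frequently cited hosts, most cited first."""
    hosts = Counter(urlparse(url).hostname or "" for url in cited_urls)
    return hosts.most_common(n)


# Hypothetical citations collected for one prompt (not real data).
citations = [
    "https://example.com/blog/onboarding",
    "https://www.example.com/hr-guide",
    "https://reviews.example.org/hr-tools",
    "https://news.example.net/hr-trends",
]
print(share_of_voice(citations, "example.com"))  # 0.5
print(top_sources(citations, n=3))
```

Tracking this number per prompt over time shows whether content changes are moving citations toward your own pages.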

Action:

From there, they moved from intuition to a repeatable system, using positioning insights to make research actionable:

- identify which LLMs rank them well vs. weakly

- pinpoint which formats and publication channels improve visibility

- translate learnings into a content map (format → channel → target LLM)

- use Chatbeat insights to verify hypotheses and iterate

Results:

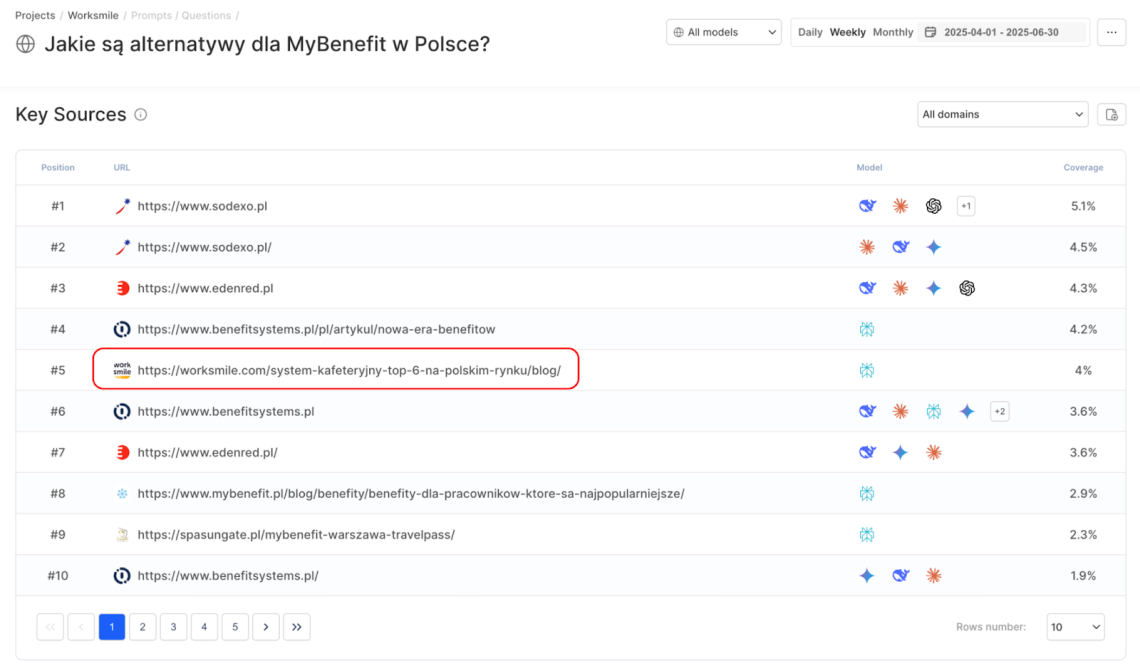

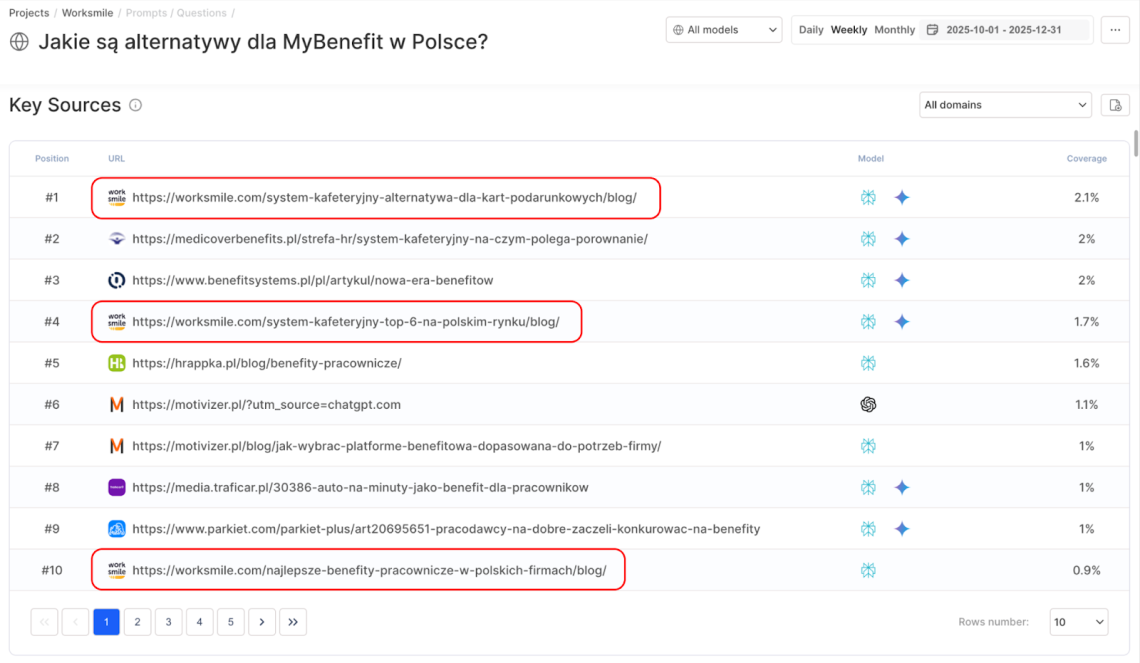

Quarter-over-quarter shift (citations for a selected prompt):

- Q2 2025: only 1 Worksmile source appeared in the Top 10 sources, and it ranked #5.

- Q4 2025: Worksmile reached #1 in citations and placed 2 more sources in the Top 10 key sources (see the screenshots above).

LLM content playbook

Want to improve how often your brand gets cited by LLMs using content? Here’s what Worksmile did – and what actually worked.

| Area | What they did | Why it matters for LLM visibility |
|---|---|---|
| 1. Source channels | Kept investing in the blog and expanded YouTube publishing, especially HR-helpful content | Many LLMs still rely heavily on SEO-indexed sources; YouTube is increasingly influential |
| 2. Community platforms | Tested Reddit and Quora (mixed results); continued efforts around Wikipedia presence | Some ecosystems can help, but require strong fit + maintenance; Wikipedia can shape “entity understanding” |
| 3. Search engine focus | Started targeting Bing for brand and product phrases (e.g., “benefits platform”) | Bing visibility can translate into stronger representation in certain LLM answers |
| 4. Authority distribution | Prioritized expert-signed content and partnerships (projects, webinars, appearing on experts’ channels) | LLMs reward credible authorship and recurring expert associations |
| 5. Formats | Built a calendar for Q&A, Top X lists, how-tos, feature lists, and direct answers | These formats are easier for models to parse, quote, and reuse accurately |
| 6. Topic coverage | Planned more product content for HR process gaps | Improves depth where the model previously “hallucinated” or under-explained |

3. Performance benchmarking and KPIs

Worksmile also needed a numeric benchmark to make sure they’re not falling behind – and to prioritize effort where it will move the needle fastest.

Key insight from Chatbeat: They could track visibility/brand score as a high-level health metric and combine it with median position as a more actionable KPI – broken down per LLM and compared against competitors (e.g., MyBenefit, PeopleForce).

This revealed two things:

- they were generally doing well vs. most competitors

- one competitor consistently ranked higher, which shifted their focus from “more visibility at any cost” to improving message quality and correctness – teaching LLMs to describe them the way they want.
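A per-model median position like the one described above can be computed from raw rank observations. The sketch below assumes each observation is a (model, brand, position) tuple gathered from repeated prompt runs; the data and names are made up for illustration and do not reflect Chatbeat's export format.

```python
from statistics import median


def median_position_by_model(observations):
    """Group (model, brand, position) tuples and return the median per (model, brand)."""
    grouped = {}
    for model, brand, position in observations:
        grouped.setdefault((model, brand), []).append(position)
    return {key: median(values) for key, values in grouped.items()}


# Hypothetical observations (not real data): lower position is better.
observations = [
    ("model-a", "our-brand", 3),
    ("model-a", "our-brand", 5),
    ("model-a", "our-brand", 4),
    ("model-a", "competitor", 2),
    ("model-a", "competitor", 2),
    ("model-b", "our-brand", 6),
    ("model-b", "our-brand", 8),
]

medians = median_position_by_model(observations)
for (model, brand), value in sorted(medians.items()):
    print(f"{model:8} {brand:10} median position {value}")
```

The median is less sensitive than the mean to a single unusually bad or good answer, which is one reason it works as an actionable KPI alongside a high-level score.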

Action:

With per-model KPIs in place (e.g., move from #3 to #2 in ChatGPT by mid-year; +1 in Perplexity; +2 in Claude and Gemini; reach “excellent” visibility by year-end), they used the benchmark to drive concrete marketing decisions:

- prioritize improvements where performance was weakest (model + prompt set)

- test channels that influence LLM sourcing (e.g., Bing, YouTube, Wikipedia/Wikidata)

- run experiments in additional platforms (Quora/Reddit)

Result:

In a few months – not years – they moved their overall visibility rating from “respectable” to “good”, and turned LLM visibility into a measurable system that directly informs marketing priorities and OKRs.

Takeaway for other brands

Worksmile’s approach was effective and repeatable:

- 1 Check your current state: see how your brand is described in each LLM (answers can differ a lot from model to model).

- 2 Pick your focus: decide if you want visibility in all LLMs, or only in the ones that matter most for your business.

- 3 Set a business goal: define what you’re trying to achieve (e.g., correct positioning, better rank for key prompts, more citations to your own sources).

- 4 Match actions to the goal: plan content and distribution based on what each model tends to use and cite.

- 5 Review and adjust regularly: the landscape changes every quarter – this isn’t a “forever plan.” Re-check and update the strategy (e.g., every 6 months).
